Avatar-based sign language translation

The sign language avatar for municipalities and companies was implemented for the “travel” domain on the basis of the research project AVASAG (Avatar-based Language Assistant for Automated Sign Language Translation). For further development, the corpus and vocabulary are being expanded beyond the travel context to cover official and business communication.

The following video presents the AVASAG research project, which deals with the automated translation of spoken or written text into sign language using an avatar. The virtual avatar serves as a signing figure and enables deaf or hearing-impaired people to access content that would otherwise only be available in spoken language.

Digital communication is changing rapidly, but not everyone is able to keep up. There are approximately 70 million deaf people worldwide, and for most of them written language is like a foreign language. Digital accessibility is therefore becoming increasingly important for communicating content dynamically and in a way that suits all target groups. Developments in human-technology interaction support barrier-free communication. Barrier-free digital content is necessary so that everyone can take advantage of today's technical possibilities and thus participate fully in digital life. It also enables public authorities and companies to fulfill their legal obligations.

In the joint project AVASAG (Avatar-based Language Assistant for Automated Sign Language Translation), funded by the Federal Ministry of Education and Research (BMBF), six partners from research and development are developing a novel real-time sign language animation process for 3D avatars. Texts are translated into sign language by combining machine learning methods with rule-based synthesis methods. To achieve high-quality results, the temporal and spatial dependencies between the sign elements are resolved precisely:

- Input text (e.g., in German) is converted into a structural representation, which is then translated into sign language.

- The avatar displays typical sign language gestures and body and facial movements that are essential for comprehensibility in sign language.

- In sign language, it is not only the hands that count; facial expressions, body posture, and spatial elements also play an important role.
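The steps above can be pictured with a small toy sketch. It is not the AVASAG implementation: the lexicon, the `SignUnit` type, the ordering rule, the fixed sign duration, and the `question_expression` label are all hypothetical and chosen only for illustration. In particular, a plain dictionary lookup stands in for the machine learning component, and a single hand-written rule stands in for rule-based synthesis.

```python
from dataclasses import dataclass, field

# Hypothetical toy lexicon (German word -> gloss label); not project data.
LEXICON = {"wann": "WHEN", "fährt": "DEPART", "zug": "TRAIN", "heute": "TODAY"}
TIME_GLOSSES = {"TODAY"}      # assumed category, for illustration only
QUESTION_GLOSSES = {"WHEN"}   # assumed category, for illustration only
SIGN_DURATION_MS = 600        # assumed fixed duration per sign


@dataclass
class SignUnit:
    """One sign on the avatar timeline: manual part plus non-manual markers."""
    gloss: str
    start_ms: int
    duration_ms: int
    non_manual: list[str] = field(default_factory=list)


def text_to_structure(text: str) -> list[str]:
    """Step 1: map input words to gloss labels (stand-in for a learned model)."""
    words = text.lower().strip("?.!").split()
    return [LEXICON[w] for w in words if w in LEXICON]


def reorder(glosses: list[str]) -> list[str]:
    """Step 2: illustrative rule that puts time signs first, question signs last."""
    time = [g for g in glosses if g in TIME_GLOSSES]
    question = [g for g in glosses if g in QUESTION_GLOSSES]
    rest = [g for g in glosses if g not in TIME_GLOSSES | QUESTION_GLOSSES]
    return time + rest + question


def schedule(glosses: list[str]) -> list[SignUnit]:
    """Step 3: place signs on a timeline and attach non-manual markers."""
    units = []
    for i, gloss in enumerate(glosses):
        markers = ["question_expression"] if gloss in QUESTION_GLOSSES else []
        units.append(SignUnit(gloss, i * SIGN_DURATION_MS, SIGN_DURATION_MS, markers))
    return units


def translate(text: str) -> list[SignUnit]:
    """Run the three toy steps in sequence."""
    return schedule(reorder(text_to_structure(text)))


if __name__ == "__main__":
    for unit in translate("Wann fährt der Zug heute?"):
        print(unit)
```

The point of the sketch is the shape of the data: each sign carries timing information and a separate channel for facial expression or posture, so hands, face, and body can be synchronized when the avatar is animated.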

One of the project's goals is to make translations as fluid and natural as possible, i.e., not rigid or robotic, so that people who use sign language can easily understand the presentation.

Technically complex aspects include, for example, the collection of useful data, the use of the latest technologies for recognizing facial expressions and movements, the preparation of training data for machine learning and artificial intelligence, real-time data generation, and the animation of the avatar, taking into account various characteristics such as expression, emotionality, synchronicity, and accuracy.

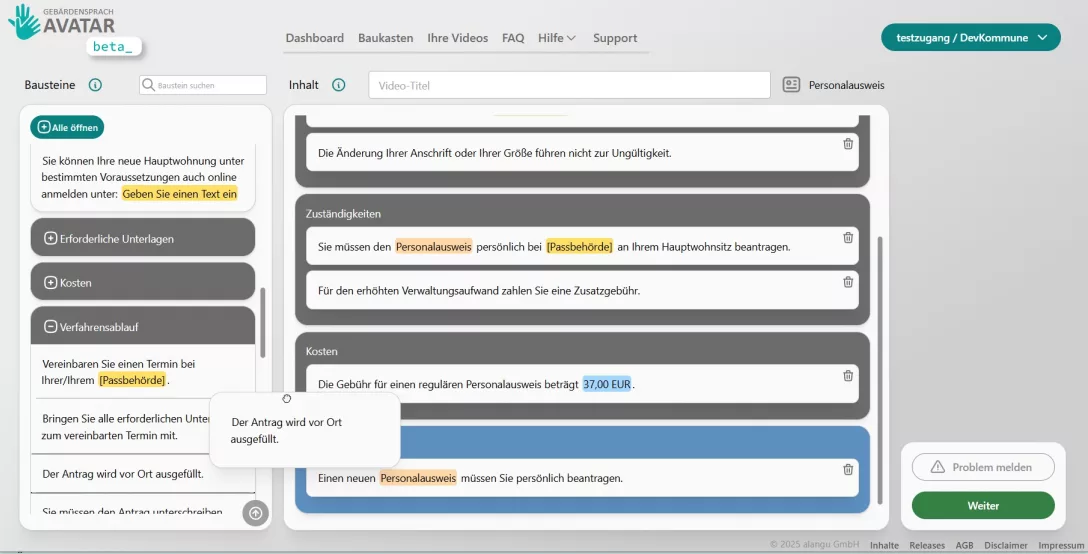

Possible application scenarios span the web, kiosks, and mobile devices. They include websites, government agencies, educational institutions, and any videos or other content that needs to be made accessible in sign language.
